Years ago (probably close to 10), I remember hearing about The Singularity and sort of laughing it off as a joke. Maybe it was an eventual possibility, but it was science fiction that humanity couldn’t fall into, because surely we are responsible enough not to let that sort of thing happen.

Humanity is Awesomely Complex

Really, we are these awesomely complicated creations that make decisions, sometimes rational, sometimes completely irrational. Sometimes we do what’s right because it’s right, and sometimes we do what’s right for other motivations. In weird cases, we do what’s wrong because the outcome will lead us to something that’s right. And this is the complexity of the human condition (I wrote something about this in 2009).

And this complexity is precisely what makes us special and unique.

But as time drifts by, it seems that we are getting closer and closer to this “fictional” singularity event.

Fragile Uniqueness of our Existence

What makes human beings unique is actually our flaws. We are foolish creatures that err often, but then we innovate and build on our mistakes. These errors have led us to some incredible innovations: penicillin, Viagra, pacemakers, even plastic. I think the sticky note was one too. And failure is ultimately what leads us to success.

We recognize that a mistake happened, adapt, and learn from it. And we can think creatively in ways that seem impossible. Heck, we are on the brink of the exact technology that might overtake what makes us unique, and we did not get there overnight! How weird is that?

In that vein, our mistakes can have serious consequences, which we apparently haven’t figured out yet. We just keep pushing ahead, making more and more complex creations, and it isn’t clear we have thought through the repercussions of what these technologies will look like or do. Some people have, but not enough.

Ethics Committees of the Highest Caliber

Humans can’t “truly” be replicated, but maybe something could get close. I read a wild story in The Atlantic about how the military is now starting to use AI to decide who and what to actually destroy… which is terrifying, because I am pretty certain this is how The Terminator started.

According to the article:

Each year, the algorithms got a little better. Soon, some teams began integrating neural networks, a computer model meant to mimic the structure of the human brain with layers of data-processing clusters imitating neurons. By 2015, two of them—a team from Microsoft and one from Google—reported striking results: They had created algorithms, they said, that could do better than humans. While humans labeled images incorrectly 5.1 percent of the time, Google’s and Microsoft’s algorithms achieved error rates below 5 percent.

So are we that far off from this fictional reality?

Years ago at SearchLove, I gave a talk about Google’s algorithms.

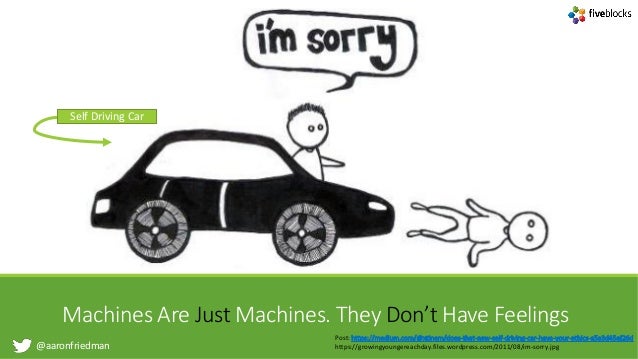

And one of the topics I touched on was how self-driving cars are being put in a position to potentially make life-or-death decisions. Imagine the scenario below: a self-driving car knows it’s about to crash but needs to decide whom to crash into. Whose life is more important than whose? It starts calculating survival rates, all based on an algorithm.

Scary.

Who is deciding these things? Who should be deciding these things? Should these technologies even exist?

And even if we did make an ethics committee, who would you want on it? Who would you trust? I have trust issues when it comes to this.

AI is Learning From Us and We are Setting a Bad Example

In the not-so-distant future, we will have AI that learns its behavior from humans. Just as with parents and children, it’s our responsibility to set a good example. When you throw garbage on the floor and your kids see it, they remember it. They learn from our behaviors.

I see AI the same way. How can you expect it to learn something that it doesn’t observe? You want it to be better than us? It will be the spitting image of us, and right now I am not sure that’s such a great thing.

We aren’t as good as we used to be, and we need to do better. We are so busy bickering about nonsense and fighting for power. Violence, fighting, lying, cheating, manipulation, screwed-up governments. If aliens landed here, what the hell would they think?!

AI needs to learn from somewhere, and there is no manual for it. Religions claim there is one, but we all see how that’s working out, with so many of them holding different views about life. No, it won’t be from a book. It will be from watching and interacting with us. AI will learn from us and then attempt to be better than us. Cue Ex Machina.

To illustrate, I give you this scene from Flight of the Navigator.

Dear Humans of the World

We are flawed and imperfect, which is why we are amazing. But let’s get our s#*t together and set a good example for the future of humanity, and for our soon-to-be robot overlords, so they hopefully treat us as we would like to be treated.

Blogging Challenge status: Prepping in advance so I don’t mess up. 9 out of 12. 3 left!